Last year, Mariano had a proposal: let’s try to automatically deploy after successful testing on Travis. We had never had anything like that before; all we had was a bunch of shell scripts that assisted the process. TL;DR: upgrading to CD level was easier than we thought, and we have introduced it for more and more client projects.

This is a deep dive into the journey we had, and where we are now. But if you’d like to jump right into the code, our Drupal Starter Kit has everything already wired in - so you can use everything yourself without too much effort.

Prerequisites

At the moment the site is assembled and tests are run on every push. To be able to perform a deployment as well, a few pre-conditions must be met:

- a solid hosting architecture, either a PaaS solution like Pantheon or Platform.sh, just to name a few that are popular in the Drupal world, or of course a custom Kubernetes cluster would do the job as well

- a well-defined way to execute a deployment

Let me elaborate a bit on these points. In our case, as we use fully managed hosting tailored to Drupal, a git push is enough to perform a deployment. Doing that from a shell script on Travis is not especially complicated.

The second point is much trickier. It means that you must not rely on manual steps during the deployments.

That can be hard sometimes. For example, while Drupal 8 manages the configuration well, sometimes there’s a need to alter the content right after the code change. The same is true when it comes to translations. For every single non-trivial thing that you’d like to manage, you need to think of a fully automated way, so Travis can execute it for you.

In our case, even when we did no deployments from Travis at all, we used semi-automatic shell scripts to do deployments by hand. Still, we had exceptions, so we prepared “deployment notes” from release to release.
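To make the "no manual steps" requirement more concrete, here is a minimal sketch of a post-deploy routine that never waits for a human. It is an illustration, not our actual script: the function name post_deploy and the DRUSH variable are hypothetical, and the list of Drush commands is an assumption about what a typical Drupal 8+ site needs. Content changes are assumed to live in update hooks, so that the database update step picks them up.

```shell
#!/bin/bash
# Sketch: run every post-deploy step without human input.
# DRUSH is an assumption; point it at however Drush is invoked in your
# setup (for example "ddev drush").
DRUSH="${DRUSH:-drush}"

post_deploy() {
  local steps=(
    "updatedb --yes"        # pending database updates, including update hooks
    "config:import --yes"   # configuration exported in code
    "locale:check"          # look for new translations
    "locale:update"         # import them
    "cache:rebuild"
  )
  local step
  for step in "${steps[@]}"; do
    echo "Running: $DRUSH $step"
    # Word splitting of $DRUSH and $step is intentional here.
    $DRUSH $step || { echo "Failed: $step" >&2; return 1; }
  done
}
```

Stopping at the first failing step keeps a half-applied deployment visible in the build log instead of hiding it behind later, successful steps.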

Authorization & Authentication

What works from your computer, for instance pushing to a Git repository, won’t work from Travis or from an empty containerized environment. These days, whatever hosting you use, password-less authentication works via a public/private key pair, so you need a new one, dedicated to your new buddy, the deployment robot.

ssh-keygen -f deployment-robot-key -N "" -C "[email protected]"

Then you can add the public key to your hosting platform, so the key is authorized to perform deployments (if you raise the bar, even to the live environment). Ideally you should create a new dummy user on the hosting platform so it’s evident in the logs that a particular deployment comes from a robot, not from a human.

So what’s next? Copying the private key to the Git repository? I hope not, as you probably wouldn’t like to open a security hole and allow anyone to perform a deployment anytime. Travis, like most CI platforms, provides a nice way to encrypt things that are not meant for every coworker. So bootstrap the CLI tool and, from the repository root, issue:

travis encrypt-file deployment-robot-key

Then follow the instructions on what to commit to the repository and what not.

Now you can deploy both from localhost and from Travis as well.
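Travis prints the exact decryption command when it encrypts the file, so treat that output as the authoritative version. As a rough sketch of what the step looks like inside a build, assuming the AES-256-CBC scheme that travis encrypt-file uses: the function name install_robot_key is hypothetical, and the environment variable names in the usage comment are placeholders, because Travis generates them per repository.

```shell
#!/bin/bash
# install_robot_key KEY_HEX IV_HEX ENCRYPTED_FILE [TARGET]
# Decrypts the robot's private key and stores it with owner-only permissions.
install_robot_key() {
  local key_hex="$1" iv_hex="$2" encrypted="$3"
  local target="${4:-$HOME/.ssh/id_rsa}"
  mkdir -p "$(dirname "$target")"
  # The restrictive umask means the file is never readable by others,
  # not even for the short moment before chmod runs.
  (umask 077 && openssl aes-256-cbc -K "$key_hex" -iv "$iv_hex" \
    -in "$encrypted" -out "$target" -d) || return 1
  chmod 600 "$target"
}

# Usage inside a build (placeholder variable names, copy the real ones
# from the output of travis encrypt-file):
# install_robot_key "$encrypted_XXXXXXXXXXXX_key" "$encrypted_XXXXXXXXXXXX_iv" \
#   deployment-robot-key.enc
```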

Old-Fashioned, Half-Automated Deployments

Let’s see a sample snippet from a project that has existed since 2007 for Drupal 7:

cd "$PANTHEON_DIR"

echo -e "${GREEN}Git commit new code.${NORMAL}\n"

git add . --all

echo -e "${YELLOW}Sleeping for 5 seconds, you can abort the process before push by hitting Ctrl-C.${NORMAL}\n"

git status

sleep 5

git commit -am "Site update from $ORIGIN_BRANCH"

git push

A little background to understand what’s going on above: For almost all the projects we have, there are two repositories. One is hosted on GitHub, has all the bells and whistles, the CI integration, all the side scripts and the whole source code, but typically not the compiled parts. Whereas, the Pantheon Git repository could be considered as an artefact repository, where all the compiled things are committed in, like the CSS from the SCSS. On that repo we also have some non-site related scripts.

So a human being sits in front of their computer with working copies of both repositories, and the script deploys to the proper environment based on the branch. After the git push, it’s up to Pantheon to do the heavy lifting.

We would like to do the same, minus the part of having a human in the middle of the process.
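Taking the human out mostly means dropping the sleep and the chance to hit Ctrl-C, and deciding up front what happens when there is nothing to commit. A minimal sketch of that idea follows; push_artifacts is a hypothetical name, and the script assumes the artifact repository already has an upstream branch configured.

```shell
#!/bin/bash
# push_artifacts DIR MESSAGE
# Commits everything in the artifact repository at DIR and pushes it,
# without pausing for confirmation.
push_artifacts() {
  local dir="$1" message="$2"
  git -C "$dir" add --all . || return 1
  # Nothing staged means nothing to deploy; treat that as success,
  # because "git commit" would otherwise fail the build.
  if git -C "$dir" diff --cached --quiet; then
    echo "No changes to deploy."
    return 0
  fi
  git -C "$dir" commit --quiet -m "$message" || return 1
  git -C "$dir" push --quiet
}

# Usage, mirroring the old snippet:
# push_artifacts "$PANTHEON_DIR" "Site update from $ORIGIN_BRANCH"
```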

Satisfying the Prerequisites

All the developers (of that project) had various tools installed like Robo (natively or in that Docker container), the Pantheon repository was cloned locally, the SSH keys were configured and tested, but inside Travis, you have nothing more than just the working copy of the GitHub repository and an empty Ubuntu image.

We ended up with a few shell scripts, for instance ci-scripts/prepare_deploy.sh:

#!/bin/bash

set -e

cd "$TRAVIS_BUILD_DIR" || exit 1

# Make Git operations possible.

cp travis-key ~/.ssh/id_rsa

chmod 600 ~/.ssh/id_rsa

# Authenticate with Terminus.

ddev auth pantheon "$TERMINUS_TOKEN"

ssh-keyscan -p 2222 "$GIT_HOST" >> ~/.ssh/known_hosts

git clone "$PANTHEON_GIT_URL" .pantheon

# Make the DDEV container aware of your ssh.

ddev auth ssh

And another one that installs DDEV inside Travis.

That’s right, we heavily rely on DDEV for being able to use Drush and Terminus cleanly. Also it ensures that what Travis does is identically replicable at localhost.

The trickiest part is doing an ssh-keyscan before the cloning; otherwise it would complain about the authenticity of the remote party. But how do you ensure the authenticity this way? One possible improvement is to use the HTTPS protocol, so the root certificates would provide some sort of check. For the record, it took quite a while to figure out that the private key of our “robot” was exposed correctly, and that the cloning failed because the known_hosts file was not yet populated.
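Another way to address the authenticity question, which we did not use in the script above, is to pin the host keys: record the expected keys once from a trusted machine, commit them, and only accept a scan that matches. The sketch below is one possible shape of that check. The function name scan_matches_pin and the file ci-scripts/pinned_known_hosts are hypothetical.

```shell
#!/bin/bash
# scan_matches_pin SCANNED_FILE PINNED_FILE
# Succeeds only if every key line in SCANNED_FILE also appears,
# verbatim, in PINNED_FILE.
scan_matches_pin() {
  local scanned="$1" pinned="$2" line count=0
  while IFS= read -r line; do
    # Skip blank lines and comment lines.
    case "$line" in ''|'#'*) continue ;; esac
    grep -Fxq -- "$line" "$pinned" || {
      echo "Unexpected host key: $line" >&2
      return 1
    }
    count=$((count + 1))
  done < "$scanned"
  # An empty scan must not pass silently.
  [ "$count" -gt 0 ]
}

# Usage (hypothetical file names):
# ssh-keyscan -p 2222 "$GIT_HOST" > scanned_hosts 2>/dev/null
# scan_matches_pin scanned_hosts ci-scripts/pinned_known_hosts \
#   && cat scanned_hosts >> ~/.ssh/known_hosts
```

The trade-off is maintenance: when the hosting provider rotates its keys, the pinned file has to be updated by hand before deployments work again.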

Re-Shape the Travis Integration

Let’s say we’d like to deploy to the qa Pantheon environment every time master is updated.

First of all, only successful builds should be propagated for deployments. Travis offers

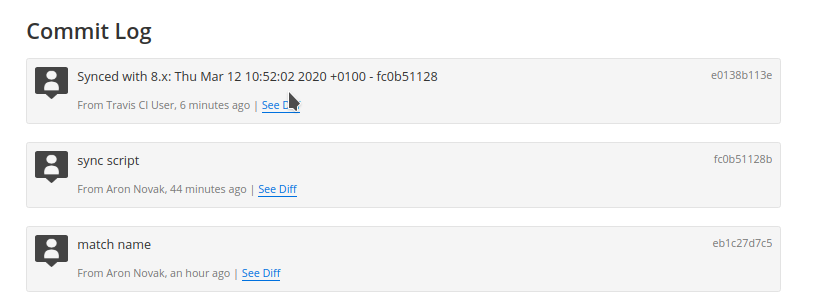

stages that we can use to accomplish that, so in practise, in our case: if linting fails, do not waste the time for the tests. If the tests fail, do not propagate the bogus state of the site to Pantheon. Here’s Drupal-starter’s travis.yaml file.

We execute linting first, for coding standard and for clean SCSS, then our beloved PHPUnit test follows, and finally we deploy to Pantheon for QA.
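Stages take care of the ordering, but the deploy step still has to decide whether this particular build should deploy at all. A small guard like the following could sit at the top of a deploy script. It is a sketch under assumptions: should_deploy is a hypothetical name, and the same condition may equally be expressed in the Travis configuration itself. TRAVIS_BRANCH and TRAVIS_PULL_REQUEST are standard Travis environment variables.

```shell
#!/bin/bash
# should_deploy [TARGET_BRANCH]
# Succeeds only for push builds of the target branch (default: master).
should_deploy() {
  local target_branch="${1:-master}"
  # Pull request builds report the base branch in TRAVIS_BRANCH,
  # so they have to be excluded explicitly.
  [ "${TRAVIS_PULL_REQUEST:-false}" = "false" ] || return 1
  [ "${TRAVIS_BRANCH:-}" = "$target_branch" ]
}

# Usage:
# should_deploy master || { echo "Skipping deployment."; exit 0; }
```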

Machine-Friendly, Actualized Script

So what’s inside that Robo command in the end? More or less the same as before: the shell script became tidier and smarter, and after a while, we migrated to Robo to get managed command execution, formatted output and error handling out of the box. Another important part is that all PHP devs can feel comfortable with that code, even if they are not Bash gurus. Here’s the deploy function. Then your new robot buddy can join the deployment party.

Do It Yourself

If you would like to try this out in action, just follow these steps - this is essentially how we set up a new client project these days:

- fork https://github.com/Gizra/drupal-starter into a new project

- install DDEV locally

- follow https://github.com/Gizra/drupal-starter#deploy-to-pantheon

- execute ddev robo deploy:config-autodeploy, detailed in https://github.com/Gizra/drupal-starter#automatic-deployment-to-pantheon

Do You Need It?

Making Drupal deployments work in a fully automated way takes time. We invested about 80 hours polishing our deployment pipeline, and we estimate that we saved about 700 hours of deployment time with this tool. Should you invest that time? Dan North, in his talk Decisions, Decisions, says:

Do not automate something until it gets boring…

So, automate your deployments! But don’t rush until you learn how to do this perfectly. And if you decide to automate, we encourage you to build it on top of our work, and save yourself a lot of time!