The Tale of Managing the Managed Elasticsearch

Boost the user and developer experience of Drupal searches with Elasticsearch.

Drupal can easily be integrated with the Apache Solr or Elasticsearch (ES for short) engines via the Search API module. This is the de facto standard for adding scalable search to a Drupal site, as the core Search module has serious limitations in terms of scalability and flexibility. The contributed modules expose only the basic capabilities of the engines, so if you’d like sophisticated language handling, support for multiple environments and so on, it’s better to know how to customize them in a maintainable way. Elasticsearch became our favourite tool after working on our latest search-heavy projects; in short, it proved superior to Solr in just about every respect.

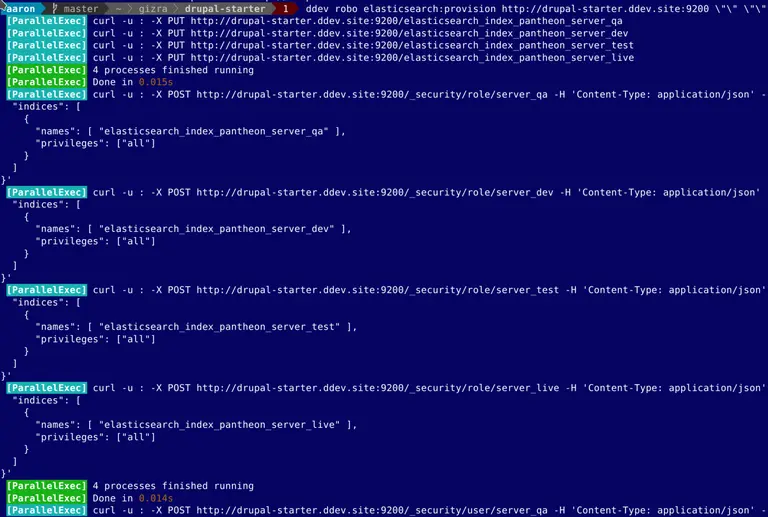

We have codified our knowledge into Drupal-Starter, which comes bundled with Elasticsearch for local development and a Robo command to provision ES instances on hosting providers such as elastic.co.

Bootstrap Elasticsearch

You need an Elasticsearch instance, either on your local machine or in the cloud. Unfortunately, hosting providers such as Pantheon seem to have dropped the ball when it comes to search functionality, and they only provide an outdated Solr instance. Luckily, managed instances aren’t too expensive, and we’ve already done the heavy lifting for you, so provisioning one shouldn’t take much more than running a single Robo command. You could obviously host it yourself, but chances are you don’t want to add Elasticsearch security advisories to your daily reading list.

We use DDEV for all our local development, so the best approach is to follow the existing contrib recipe to get a local ES instance running in the most efficient way. Do not forget to match the ES version (image: elasticsearch:7.6.1) to the one that will be used in production.
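A quick way to verify that the versions match is to compare the `version.number` field Elasticsearch returns from its root endpoint (`GET /`). The sketch below is in Python purely for illustration (our own tooling is a RoboFile); the function names are hypothetical, and the basic-auth credentials are only needed for a secured, managed cluster.

```python
import base64
import json
import urllib.request
from typing import Any, Dict, Optional


def fetch_root(base_url: str, user: Optional[str] = None,
               password: Optional[str] = None) -> Dict[str, Any]:
    """Call GET / on a cluster; its JSON answer includes version.number."""
    request = urllib.request.Request(base_url.rstrip("/") + "/")
    if user is not None and password is not None:
        # A secured cluster can be accessed with HTTP basic auth.
        token = base64.b64encode(f"{user}:{password}".encode()).decode()
        request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def version_of(root: Dict[str, Any]) -> str:
    """Extract the version string, e.g. "7.6.1", from a GET / response."""
    return root["version"]["number"]


def check_versions(local_root: Dict[str, Any], production_root: Dict[str, Any]) -> None:
    """Fail loudly if the local and production clusters run different versions."""
    local, production = version_of(local_root), version_of(production_root)
    if local != production:
        raise RuntimeError(f"Local ES {local} does not match production ES {production}")
```

For example, `check_versions(fetch_root("http://localhost:9200"), fetch_root(production_url, user, password))`, where the local URL, `production_url` and the credentials are placeholders for your own setup.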

For a native installation, you might find useful the Gist we used in pre-DDEV times. The code in the Gist looks like a shell script converted to Robo, and that’s exactly what it is, but Robo gives us managed command execution, formatted output and error handling out of the box, which will come in handy later.

Either way, your local machine now has an instance up and running, so you can save a few dollars while testing. Don’t rush to submit the order form for your managed instance just yet.

Provision Elasticsearch

Providing efficient search for your visitors is not merely a matter of using a great search engine; the engine just gives you the power to fine-tune every detail. This blog post doesn’t aim to replace the ES documentation; instead, we highlight three examples that are already in our starter kit.

Multiple Indices

We typically run at least four instances of the same website (QA, DEV, TEST and LIVE), so we create one index per instance to keep the search data separated without needing multiple Elasticsearch servers. We wrote a RoboFile that creates the indices automatically for us. This way, the local ES can be nearly identical to the one serving the production site. Using Elastic’s REST API, we create the indices, secure them with a password and configure them for sophisticated search.
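The RoboFile itself is PHP; as a language-neutral illustration, the Python sketch below only builds the sequence of REST calls for one index per environment, using Elasticsearch’s index creation and security APIs (`PUT /<index>`, `PUT /_security/role/<name>`, `PUT /_security/user/<name>`). The `<site>_<env>` naming scheme, the one-role-and-one-user-per-index layout and the function names are assumptions, not necessarily what Drupal-Starter does. The security endpoints require Elasticsearch security to be enabled, as it is on elastic.co deployments.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical list of site instances; the post names QA, DEV, TEST and LIVE.
ENVIRONMENTS = ["qa", "dev", "test", "live"]

# (HTTP method, path, JSON body) for one REST call.
Request = Tuple[str, str, Dict[str, Any]]


def index_name(site: str, env: str) -> str:
    """Hypothetical naming scheme: one index per site instance, e.g. "mysite_dev"."""
    return f"{site}_{env}"


def provisioning_plan(site: str, passwords: Dict[str, str]) -> List[Request]:
    """Build the calls that create and secure one index per environment."""
    plan: List[Request] = []
    for env in ENVIRONMENTS:
        index = index_name(site, env)
        # Create an empty index; analysis settings can be added later.
        plan.append(("PUT", f"/{index}", {}))
        # A role whose privileges are limited to this single index.
        plan.append((
            "PUT",
            f"/_security/role/{index}",
            {"indices": [{"names": [index], "privileges": ["all"]}]},
        ))
        # A password-protected user for the matching Drupal instance.
        plan.append((
            "PUT",
            f"/_security/user/{index}",
            {"password": passwords[env], "roles": [index]},
        ))
    return plan
```

Each tuple can be sent with any HTTP client authenticated as an administrative user. The role and user calls create or update, but `PUT /<index>` fails if the index already exists, so a re-runnable script would first check with `HEAD /<index>` and skip creation.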

Synonyms

Assembling a list of synonyms is a linguistic and content-architecture task. With synonyms, a search for one word also matches documents that contain an equivalent word. For example:

CMS => Content Management System

This case is obviously helpful: the visitor can search by the acronym, and even if a blog post only uses the long form, it will still be a hit. Maintaining this list is likely a recurring task, adapting it to the search analytics: who searches for what on the website, and with what kind of keywords. For us, the interesting part is baking it into the RoboFile. We set it up once, and the local instance, production and all other environments get the same configuration. The RoboFile also takes care of maintenance: running it again deploys the updates, which is why we try to keep the Elasticsearch part idempotent.
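Synonyms are configured as a `synonym` token filter inside a custom analyzer. Because analysis settings are not dynamic, a re-runnable update closes the index, applies the settings and reopens it, which leaves the index in the same state no matter how often it runs. The Python sketch below again only builds the requests; the filter and analyzer names are hypothetical, the synonym list is reduced to the single rule from the example above, and the RoboFile’s actual configuration may differ.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical synonym rules; the explicit "=>" form rewrites the left side to the right side.
SYNONYMS = ["cms => content management system"]

# (HTTP method, path, JSON body) for one REST call.
Request = Tuple[str, str, Dict[str, Any]]


def synonym_settings(synonyms: List[str]) -> Dict[str, Any]:
    """Analysis settings with a custom analyzer that lowercases, then applies synonyms."""
    return {
        "analysis": {
            "filter": {
                "site_synonyms": {"type": "synonym", "synonyms": synonyms},
            },
            "analyzer": {
                "site_text_synonyms": {
                    "type": "custom",
                    "tokenizer": "standard",
                    # Lowercasing first lets a query for "CMS" hit the "cms" rule.
                    "filter": ["lowercase", "site_synonyms"],
                },
            },
        }
    }


def synonym_update_plan(index: str, synonyms: List[str]) -> List[Request]:
    """Re-runnable update: close the index, apply the settings, reopen it."""
    return [
        ("POST", f"/{index}/_close", {}),
        ("PUT", f"/{index}/_settings", synonym_settings(synonyms)),
        ("POST", f"/{index}/_open", {}),
    ]
```

Fields only benefit once their mapping uses the custom analyzer, and documents indexed before the change keep their old tokens, so a change to the synonym list is typically followed by re-indexing from Drupal.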

Stop Words

In the very same way, Elasticsearch and our starter kit support stop words, which ensure that overly generic words do not ruin the ranking or the result set.

Let’s say the search phrase is “the cloud” and all the node titles contain “the”: ignoring “the”, the relevant hits are only the ones containing “cloud”.
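Stop words are handled by a `stop` token filter. The Python sketch below shows the corresponding analysis settings next to a tiny local model of the analyzer chain, so the effect on the “the cloud” example can be checked without a cluster. The stop word list and the names are hypothetical; Elasticsearch also ships predefined lists such as `_english_`, and `toy_analyze` only mimics the result, it does not reproduce Elasticsearch’s `standard` tokenizer.

```python
import re
from typing import Any, Dict, List, Set

# Hypothetical, deliberately tiny stop word list.
STOP_WORDS: Set[str] = {"a", "an", "and", "of", "the"}


def stop_filter_settings(stop_words: Set[str]) -> Dict[str, Any]:
    """Analysis settings for a custom analyzer with a `stop` token filter."""
    return {
        "analysis": {
            "filter": {
                "site_stop": {"type": "stop", "stopwords": sorted(stop_words)},
            },
            "analyzer": {
                "site_text_stop": {
                    "type": "custom",
                    "tokenizer": "standard",
                    "filter": ["lowercase", "site_stop"],
                },
            },
        }
    }


def toy_analyze(text: str, stop_words: Set[str]) -> List[str]:
    """Local approximation of the analyzer above: lowercase, split into
    word tokens, drop stop words. Not Elasticsearch's real tokenizer."""
    tokens = re.findall(r"\w+", text.lower())
    return [token for token in tokens if token not in stop_words]
```

With the filter applied at both index and query time, “the cloud” is reduced to the single term `cloud`, so titles that merely share “the” no longer match or score.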

Integrate with Drupal

At this point, we have arrived at the only weak point we have encountered in the whole ecosystem. The Elasticsearch Connector contrib module is somewhat less actively maintained than Search API Solr; for instance, at the time of writing, only the latter supports Drupal 9. That said, we use it on a number of client projects without issues, but be prepared to write some patches to achieve what you want. Above, we made lots of customizations to our index via the RoboFile, but the integration module throws them away when the index gets cleared, so we contributed a patch that preserves all the custom configuration. A few more patches were needed to get everything working, and they are all part of our Drupal-Starter’s composer.json now. All in all, the developer-friendly REST API and the easier overall management persuaded us that, despite the above, we’re on the right track.

The Icing on the Cake

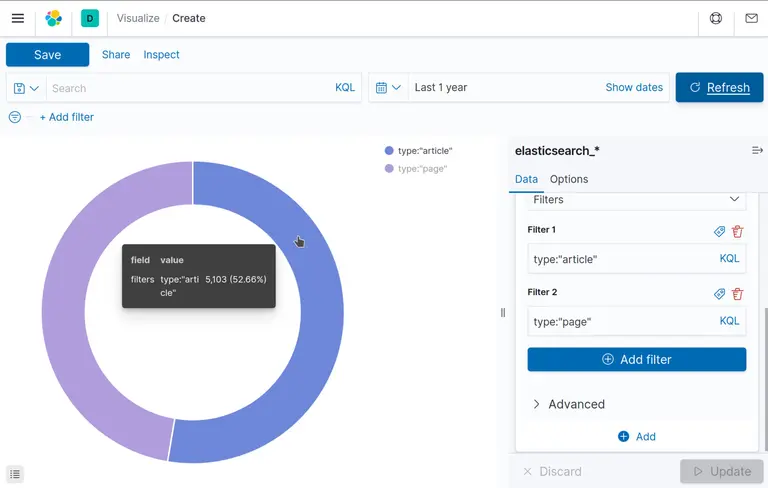

The ecosystem around the engine is appealing. When you have lots of data in the index, the analytical tools that Kibana provides can be handy.

When weighing the benefits, consider showcasing Kibana to the client: you can put any entity into the index, so whatever data you have can be scrutinised with ease!

Áron Novák